Debating the ethical use of artificial intelligence

At a glance

- The AI market is expected to have an annual growth rate of 37.3% through 2030, according to Grand View Research.

- Many see AI as beneficial because it can automate processes such as data gathering and assessment, monitoring automated systems, predicting maintenance needs and more.

- Others feel skeptical of AI and worry that it may render certain jobs or skill sets obsolete, manipulate people to believe or behave a certain way, or surveil the public with little regard for privacy.

- The Bachelor of Science in Data Science and Master of Science in Data Science at University of Phoenix cover the use of AI, machine learning, deep learning, and data analysis and use.

The use of artificial intelligence (AI) is on the rise. Projections by Grand View Research point to a 37.3% compound annual growth rate in the AI market between 2023 and 2030.
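
To see what a compound annual growth rate of that size implies, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name is made up for the example, and treating 2023 to 2030 as seven compounding periods is an assumption, not something the research report states.

```python
def compound_growth_multiple(annual_rate: float, years: int) -> float:
    """Return the total growth multiple after compounding annual_rate for years."""
    return (1 + annual_rate) ** years


# 37.3% is the rate quoted above. Seven compounding periods (2023 to 2030)
# is an assumption made for illustration.
multiple = compound_growth_multiple(0.373, 7)
print(f"Market size multiple after 7 years: {multiple:.1f}x")  # prints 9.2x
```

Under those assumptions, a market growing at 37.3% a year would end the period roughly nine times larger than it started.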

In practical terms, AI can take many forms. Major corporations have already invested in developing AI systems. Meanwhile, tech firms are racing to develop technology such as artificial neural networks, which try to mimic the patterns of the human brain.

Despite the growing use of AI, many have raised concerns about ethics and the governance AI requires. After all, this is a relatively new field with far-reaching ramifications that are both helpful and concerning.

To get a better understanding of AI and its ethical debate, we sat down with Joseph Aranyosi, an associate dean of the College of Business and Information Technology at University of Phoenix.

How are organizations using AI?

Grand View Research found that healthcare, marketing and market research, retail and manufacturing are industries currently using responsible AI to automate and streamline processes and cut costs.

In particular, many organizations have been using AI to collect and analyze customer data, according to a 2022 report by McKinsey & Company. But consumer behavior and customer data analysis (especially in marketing) have been around for about 100 years now, so it's not really a new field or activity, Aranyosi says. However, the use of AI to automatically analyze customer data for trends, preferences and predictive modeling is relatively new. AI data analysis has been applied in various industries, such as:

- Healthcare (review patient data for potential diagnoses, medication contraindications, billing and coding)

- Finance (fraud detection, analyze customer spending habits, evaluate credit card use, bank transfers)

- Marketing (marketing analyses, SEO, customer preferences and preferred page views)

- Operations and supply chain management (tracking inventory and shipments, warehousing, evaluating resource and production costs, automated workflow adjustments)

When personal data is collected, security and guidelines for ethical use are of paramount importance. Every organization that collects customer or patient data has an ethical obligation to protect it and to share it only on an as-needed basis. Most major businesses have data governance committees and policies that provide these guidelines and monitor the use of AI in data analyses, Aranyosi says.

Healthcare

According to PricewaterhouseCoopers, responsible AI has many applications in healthcare. Algorithm-powered software can assess diagnostic images and test results to find illnesses with 99% accuracy. Health information technology uses programs to analyze large amounts of patient data for insights on treatment outcomes and disease risks. However, only qualified healthcare professionals should make diagnoses. AI is just a tool they use in the evaluation process to recommend additional testing and verification.

Despite the potential benefits of responsible AI use, many patients are concerned about the potential threat to their health data security and privacy. The Pew Research Center found that, in principle, 60% of patients would be uncomfortable with their doctor using AI in their healthcare. The ethical concerns are:

- Even responsible AI systems might collect and analyze information not necessary for medical care

- Insurers could use the analysis to deny coverage

Finance

AI programs can automate institutional-level trading, and the software can also automate time-consuming processes like depositing checks, onboarding new customers and processing loan applications.

Artificial intelligence can also crunch vast amounts of data and provide financial insights that can guide risk management and decision-making. Meanwhile, AI-powered financial advisors can assess investors’ needs and goals and offer advice for a fraction of the cost of a human advisor.

Similar to other industries, AI-based finance innovations have brought serious ethical concerns about data privacy. The financial sector experienced more data breaches than any other industry, except healthcare, in 2022. The use of artificial intelligence could exacerbate that vulnerability. Also, instances of unintended bias in AI-based loan processing can make it more difficult for some disenfranchised groups to obtain credit.

Aranyosi also observes: “AI is never 100% objective since all programming is done by humans. So, if an AI is tasked to analyze data using biased standards, then it’s going to give biased results. In IT, you often hear the phrase ‘Garbage in, garbage out,’ and this definitely applies to AI. The problem is that most of these systems/processes are proprietary and not open to objective review, so it’s difficult to find and prove that such AI analyses are biased without additional scrutiny or regulatory oversight.”

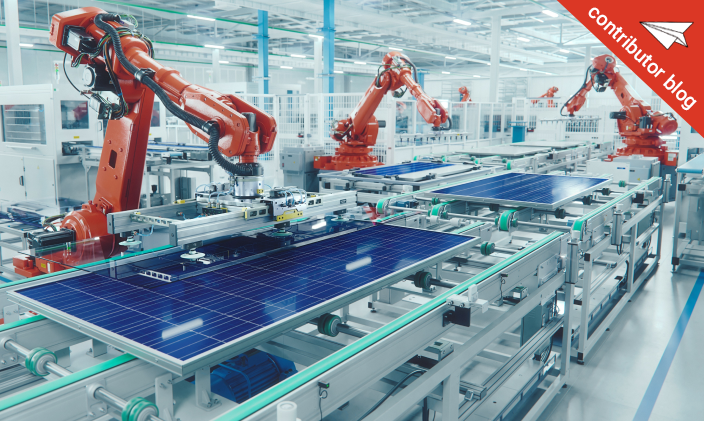

Manufacturing

AI has transformed manufacturing in several ways. The use of robotics to automate the production process was one of the first examples of artificial intelligence. Today, embedded systems can monitor automated systems, collecting data that can improve efficiency, increase quality and predict maintenance needs for equipment.

Artificial intelligence also analyzes inventory, supply chain and demand data to help manufacturers evaluate if they have enough materials to meet customer needs.

Because manufacturing-related systems require embedded software, smart machinery and complex computer systems, implementation can be expensive. Also, AI-powered equipment can make employees redundant at certain companies. Automation advances spurred by the COVID-19 pandemic could lead to 2 million lost manufacturing jobs by 2025.

According to the World Economic Forum (WEF), AI is expected to replace 85 million jobs worldwide by 2025. However, the report goes on to say that it will also create 97 million new jobs in that same time frame.

Retail

The WEF suggests AI has already brought benefits to retail and will bring more. Software for customer relationship management and marketing relies on AI to predict demand and analyze vast amounts of customer data to inform and assess marketing strategies. Because these insights come quickly with the rapid processing power of AI, marketers can get real-time insights as a campaign is unfolding.

AI can also detect fraudulent charges, monitor sensors and security systems to prevent theft and optimize operations in a way that lowers overhead for both in-person and online businesses. These lower costs could theoretically be passed along to consumers.

However, these conveniences have the potential to make human workers redundant and cause job losses in the retail sector.

Criminal justice

Law enforcement agencies have always collected data on crimes and criminals to find areas to focus their efforts. New AI tools allow for enhanced data analysis for vast amounts of information.

Software can also analyze forensic evidence and provide proof from specific data, such as the sound of a gun or the layout of a crime scene. Tools like facial recognition can also help police find criminals and identify suspects.

AI in policing and criminal justice raises questions of bias and privacy issues. There are also concerns that certain types of monitoring violate privacy rights because people are recorded or have their identity checked even though they are not under suspicion.

Insurance

AI plays a major role in insurance through the automation of repetitive tasks, risk assessment and analysis, and fraud detection. AI can handle manual data collection and basic assessment tasks, support decision-making and improve risk assessment for insurance underwriters reviewing policy applications and calculating premiums.

Fraud detection is another area where AI benefits insurance companies. Algorithm-powered software can collect claims data and look for patterns of potential fraud. These systems can detect complex patterns that would escape the notice of human fraud prevention specialists.
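
As a rough illustration of pattern-based screening, the sketch below flags claim amounts that sit far from the typical value. It uses a simple median-based outlier test, which is a much simpler technique than the systems insurers actually deploy. The claim amounts, the function name and the cutoff of 3.5 are all assumptions chosen for the example.

```python
import statistics


def flag_outliers(amounts, cutoff=3.5):
    """Return amounts whose modified z-score (median/MAD based) exceeds cutoff."""
    median = statistics.median(amounts)
    # Median absolute deviation: the typical distance from the median.
    mad = statistics.median(abs(a - median) for a in amounts)
    if mad == 0:
        return []  # all values are (nearly) identical, so nothing stands out
    return [a for a in amounts if 0.6745 * abs(a - median) / mad > cutoff]


# Hypothetical claim amounts in dollars; one is far larger than the rest.
claims = [1200, 950, 1100, 1020, 980, 1150, 9800]
print(flag_outliers(claims))  # prints [9800]
```

A flagged claim is only a prompt for closer review by a person, not proof of fraud.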

Of course, the other side of this coin is just how much data is collected, especially without people’s knowledge or consent.

Transportation

Most of the press related to AI in transportation focuses on self-driving cars. Smart safety features have become common in cars, and partially autonomous systems improve safety on ships, trains and airplanes.

Algorithm-powered software can also help with airline scheduling and traffic congestion. It can be leveraged to find optimal routes to reduce transit time and limit carbon emissions as well.

Transportation-related AI systems can be expensive, however. For personal cars, this often means safety features are available on newer vehicles, which consumers would have to purchase. Also, newer tech still requires human oversight and, in case of malfunction, intervention.

How is responsible AI being used in everyday life?

Responsible AI is transforming business organizations and services in obvious ways, but it has also found its way into people’s everyday lives.

Aranyosi explains: “Most of us are familiar with the use of AI in the Internet of Things [IoT], such as the Siri, Alexa, or Google Assistant apps. Many home appliances are now internet-connected devices that can automate processes, share maintenance recommendations, compile grocery lists, help navigate us through traffic, etc. Apple Watch is a great example of wearable AI technology. All of these are designed to make our lives easier and keep us informed, so although there’s still the possibility for the ethical misuse of personal data, in most cases AI can be used responsibly to simplify and improve our lives.”

Here are a few examples of artificial intelligence in your home, vehicle and pocket:

- Self-driving cars and accident-avoidance systems (like automatic braking) rely on sensors that collect data from the road and steer the car away from collisions.

- Smart home systems take temperature or light readings and optimize settings without human intervention.

- Personal digital assistants and smart speakers use natural language processing systems, a subcategory of AI, to answer questions and perform tasks via voice command.

- Map applications like Google Maps read current road conditions and provide directions and route planning for users.

Although these tools might not be as sophisticated as those employed by corporations, they still offer real benefits and give rise to many of the same concerns.

Benefits of responsible AI

Whether in an organization or at an individual, personal level, responsible AI has benefits:

- The automation of mundane tasks allows workers, businesspeople and companies to focus on higher-level tasks.

- Fast processing and analysis of large amounts of data by AI algorithms give users better information with which to make decisions.

- Personalized data allows businesses and websites to show users relevant content and products, leading to a better overall user experience.

- Pattern recognition in artificial intelligence software can recognize trends in complex data sets, images or other media.

Responsible AI can also offer insights that help companies reduce costs, limit environmental impact and bring other positive attributes to their business operations.

“Good examples of responsible AI are health- and sleep-monitoring apps, which can provide us with helpful reminders, updates and recommendations for improving our exercise routines or eating patterns,” Aranyosi says.

Ethical problems associated with AI

Though ethical concerns vary according to industry and organization, there are broader concerns that affect all AI uses. These usually center on bias, transparency, privacy, manipulation and the effect of automation on work.

“AI is a rapidly-growing area of research. Like most new technology, it’s not going to be perfect right out of the gate,” says Aranyosi. “Researchers, businesses, educational institutions and other organizations continue to look for ways to build better diversity, equity, inclusion and belongingness into AI tools and are actively seeking feedback from a wide variety of constituencies that can help to improve these tools through responsible use, security, safety and representation. It’s important that we continue to have healthy dialogues about the benefits and concerns of AI to ensure that we’re appropriately meeting everyone’s needs.”

Bias

UNESCO points to instances of bias in one of the world’s most commonly used artificial intelligence systems: internet search engines. Type in an image prompt for “school girls,” for example, and you’re likely to get options very different in nature than if you prompted “school boys.”

Bias can also affect the decisions AI systems make. Although these systems use complex algorithms and seem to act independently, human programmers coded the software and created the algorithms. They may have added factors that favor certain types of data. This favoritism may be unintentional, but it can have a real impact when it comes to law enforcement, loan applications or insurance underwriting.

“AI development needs to include input from a wide, diverse pool of researchers, developers and users. This input needs to include representation from historically marginalized groups to ensure that we’re not unintentionally skewing the data sets and algorithms being used,” Aranyosi says.

He continues: “Objectivism, accuracy, quality assurance, feedback and other mechanisms need to be built into the development process to ensure that such unintended consequences can be addressed quickly and to everyone’s satisfaction. Although bias is an inherent part of being human, we can better address bias collectively by ensuring that everyone has a voice in the conversation and that these concerns are taken seriously to make meaningful improvements.”

Lack of transparency

AI products often have a transparency paradox. On the one hand, AI software developers may be tempted to release information about their products to prove they do not have a bias or privacy problem. On the other, doing so could expose security vulnerabilities to hackers, invite lawsuits and allow competitors to gain insights into their processes.

Surveillance

Governments and organizations use AI to assess video feeds, images and data to conduct surveillance on private citizens. These practices raise privacy and bias issues and cause concern about the power that personal data and images give to governments or companies using AI-enhanced systems.

Behavioral manipulation

With the right algorithms, a website or app can display content meant to manipulate people into developing specific opinions. In the wrong hands, this type of system has the potential to control people’s opinions and, through deepfakes and other AI-manipulated content, spur them to act in support of an unscrupulous leader.

Automation of work

AI allows businesses to streamline operations and automate processes. A report by Goldman Sachs found that as many as 300 million jobs worldwide could be exposed to AI automation over the next 10 years but that most of those jobs would be complemented by AI rather than replaced.

The singularity

Technological singularity is a hypothetical point in the future when tech and AI are such a part of life that they irreversibly change humanity and the world. The theory suggests that AI could take on human qualities, such as curiosity and desire, and that computerized brain implants and other advances would allow humans to develop superintelligence.

Critics write off singularity as a fantasy, but others are concerned that specific aspects of technology could irreversibly alter the world.

Ethical considerations for responsible AI

To keep these ethical dilemmas from materializing further, guidelines and frameworks have been proposed to maintain ethical AI use in the workplace, at home and in wider society.

Informed consent

Informed consent is when medical providers give patients information about a treatment, procedure, medication or medical trial. Once they understand the potential benefits and risks, patients can decide whether to proceed.

Informed consent can extend to AI-powered systems, with physicians telling patients about the data collected, accuracy and other factors arising from AI. Patients can weigh the decision, and providers will need to respect their wishes.

Algorithmic fairness

Software makers can assess the fairness of their artificial intelligence algorithms before deploying them. The first step is to define fairness and then assess data fed into the algorithm, the algorithm itself and the results to ensure each step fits the definition.

Developers can also test their algorithm to ensure certain variables — such as age, gender or race — do not alter the results.
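
One way to picture such a test is sketched below. The loan rule, the applicant fields and the sample data are all hypothetical stand-ins for a real model and data set. The sketch runs two checks: whether changing only a protected attribute changes any decision, and whether approval rates differ between groups.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Applicant:
    income: float
    debt: float
    gender: str  # protected attribute; it should not affect the decision


def approve(applicant: Applicant) -> bool:
    """Hypothetical stand-in for a model: approve when debt-to-income is low."""
    return applicant.debt / applicant.income < 0.4


def flip_changes_decision(applicant: Applicant, alternative: str) -> bool:
    """Return True if changing only the gender field changes the outcome."""
    counterfactual = replace(applicant, gender=alternative)
    return approve(applicant) != approve(counterfactual)


def approval_rate(applicants, group: str) -> float:
    """Share of applicants in the given group who are approved."""
    members = [a for a in applicants if a.gender == group]
    return sum(approve(a) for a in members) / len(members)


# Made-up sample data for illustration only.
applicants = [
    Applicant(50_000, 10_000, "F"),
    Applicant(40_000, 30_000, "F"),
    Applicant(60_000, 12_000, "M"),
    Applicant(30_000, 20_000, "M"),
]

flagged = [
    a for a in applicants
    if flip_changes_decision(a, "M" if a.gender == "F" else "F")
]
gap = abs(approval_rate(applicants, "F") - approval_rate(applicants, "M"))
print(f"Decisions changed by flipping gender: {len(flagged)}")
print(f"Approval-rate gap between groups: {gap:.2f}")
```

Passing both checks does not by itself show that a system is fair, because other inputs can act as proxies for a protected attribute. That is why the definition of fairness chosen in the first step matters.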

Data privacy

AI yields impressive results because it uses huge amounts of data. Much of this is personal data that people might want to protect and not have exposed. Hackers target sites holding sensitive financial or healthcare information more than sites holding any other type of data.

In addition to enhancing database security, companies and organizations can ensure algorithms use the minimum amount of identifying data, or decouple data from users before feeding it into the AI system.
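
A minimal sketch of both ideas, data minimization and decoupling, might look like the following. The field names, the allowed-field list and the key are hypothetical; a real system would load the key from a secure store rather than hard-code it.

```python
import hashlib
import hmac

# Hypothetical values for illustration; never hard-code a real key.
SECRET_KEY = b"replace-with-a-securely-stored-key"
ALLOWED_FIELDS = {"age_band", "purchase_total"}  # assumed minimum the model needs


def pseudonymize(user_id: str) -> str:
    """Replace a user ID with a keyed hash so records stay linkable but not directly identifying."""
    return hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict) -> dict:
    """Keep only the allowed fields and swap the user ID for a pseudonym."""
    reduced = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    reduced["subject"] = pseudonymize(record["user_id"])
    return reduced


raw = {
    "user_id": "u-1001",
    "name": "Pat Example",
    "email": "pat@example.com",
    "age_band": "30-39",
    "purchase_total": 182.50,
}
clean = minimize(raw)
print(sorted(clean))  # prints ['age_band', 'purchase_total', 'subject']
```

The name and email never reach the AI system, and the keyed hash lets the same customer's records be grouped without revealing who the customer is to anyone who lacks the key.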

Safety

AI safety involves defining potential risks and taking steps to avoid catastrophic incidents due to AI failures. The process includes monitoring performance and operations to look for malicious activity and estimating the algorithm’s level of certainty in each particular decision.

Transparency can also help with safety because it would give third parties a chance to assess the algorithms and find vulnerabilities.
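
The idea of estimating certainty for each decision can be sketched as a simple rule: act on a prediction only when the system is confident, and otherwise hand the case to a person. The function names, the labels and the 0.9 threshold below are assumptions for illustration, and the raw scores stand in for the output of a real model.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decide(scores, labels, threshold=0.9):
    """Return (label, confidence), or defer to a human when confidence is low."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return ("needs_human_review", probs[best])
    return (labels[best], probs[best])


labels = ["approve", "deny"]
print(decide([4.0, 0.5], labels)[0])  # prints approve
print(decide([1.0, 0.8], labels)[0])  # prints needs_human_review
```

Probabilities computed this way are not always well calibrated, so a threshold like this would need to be checked against real outcomes before anyone relied on it.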

The future of careers and AI

In the not-so-distant future, the integration of artificial intelligence is set to revolutionize diverse professional landscapes. From streamlining complex decision-making processes to enhancing productivity, AI is poised to become an indispensable and responsible ally in the workplace. As this technology advances, professionals in various fields can anticipate a shift in their roles, with routine tasks increasingly automated, freeing up valuable time for higher-order thinking and strategic endeavors.

The advent of responsible AI is likely to reshape how professionals approach problem-solving and innovation. Machine learning algorithms will empower individuals to leverage vast amounts of data, extracting actionable insights and fostering more informed and responsible decision-making. In this evolving landscape, there emerges a shared responsibility among both human professionals and AI systems to uphold ethical standards and ensure the responsible use of technology.

Collaboration between human expertise and the responsible capabilities of AI is expected to unlock new frontiers, pushing the boundaries of what is currently achievable within the professional realm. Moreover, the personalization of responsible AI tools is set to create tailored experiences for individuals, optimizing workflow and boosting overall efficiency. Professionals may find themselves working alongside intelligent systems that adapt to their preferences and learning styles, creating a symbiotic and responsible relationship that amplifies human potential.

While some may express concerns about job displacement, the prevailing narrative suggests a transformation rather than outright replacement. Responsible AI is poised to augment human abilities, allowing professionals to focus on tasks that require emotional intelligence, creativity, and nuanced problem-solving — areas where machines currently struggle to excel.

As the future unfolds, the integration of responsible AI into various careers holds the promise of unlocking unprecedented opportunities for growth, innovation and professional fulfillment while emphasizing the ethical and responsible use of these powerful technologies.

Data science at University of Phoenix

Aranyosi notes that University of Phoenix’s data science degrees “cover AI, machine learning, deep learning, and data analysis and use. Many of our IT degrees include content on programming, software development, database administration, networking, data standards, security, ethics and other related topics.”

If a career in data science interests you, learn more about the Bachelor of Science in Data Science and Master of Science in Data Science at University of Phoenix. There are also other online programs in information technology to consider if you’re seeking foundational IT knowledge.

ABOUT THE AUTHOR

A graduate of Johns Hopkins University and its Writing Seminars program and winner of the Stephen A. Dixon Literary Prize, Michael Feder brings an eye for detail and a passion for research to every article he writes. His academic and professional background includes experience in marketing, content development, script writing and SEO. Today, he works as a multimedia specialist at University of Phoenix where he covers a variety of topics ranging from healthcare to IT.
